by Manel Soengas

The European Union’s Artificial Intelligence (AI) Act establishes a regulatory framework to govern the use of AI across Europe. This legislation classifies AI systems according to their level of risk and sets specific restrictions to protect fundamental rights, safety, and privacy. In the educational field, there are platforms that use AI to personalize learning, automate exam grading, and support academic management tasks. The aim of this article is to analyze how this regulation impacts education and which uses of AI will be allowed, restricted, or prohibited.

Figure 1: Image generated with generative artificial intelligence

The EU AI Act: An Overview

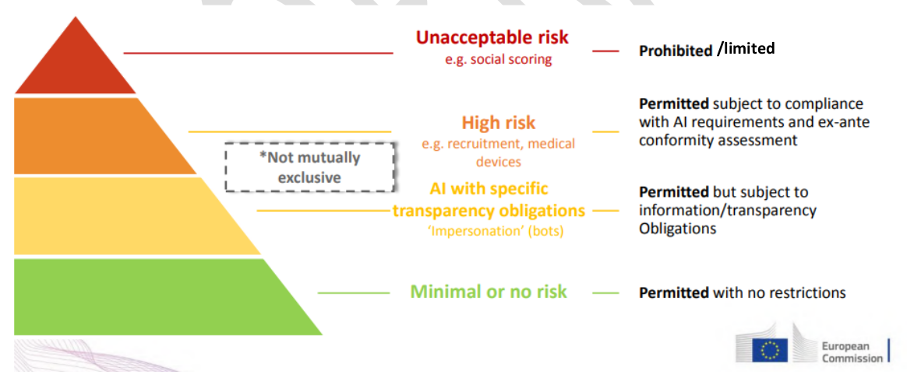

The European AI Act classifies AI applications into four risk categories:

- Unacceptable risk: systems that are prohibited because they are considered a threat to fundamental rights.

- High risk: applications that may affect the safety or rights of individuals and will require strict evaluations.

- Limited risk: systems with transparency requirements to ensure appropriate use.

- Minimal risk: applications that do not pose significant danger and can be used freely.


AI in Education: Where Is It Being Applied?

AI is currently being used in various areas of education through platforms and the use of language models:

- Personalized learning: tools like Khan Academy with AI adapt content to students’ individual needs.

- Automated assessment: systems that grade multiple-choice tests and even written responses.

- Virtual tutors: AI assistants that support students with questions across different subjects.

- Academic management platforms: AI applied to detecting early school dropout or improving resource planning.

- Generative AI: large language models (LLMs) used to create learning activities.

Based on this scenario, different types of uses emerge depending on the context:

- Administration: Although the education administration does not implement AI systems directly, it has developed guidelines for educational institutions titled “Guidelines and Recommendations for the Use of Artificial Intelligence in Educational Centers” (AI Act). It also organizes teacher training courses and compiles and shares best practices and resources through a corporate website.

- Educational institutions: These combine the use of platforms and language models to carry out administrative and management tasks, such as creating schedules, managing attendance, or generating document templates, among others.

- Teachers: They use AI-based tools to prepare teaching materials, manage administrative tasks, or personalize learning, among other uses.

- Students: They primarily use generative AI to produce content, support their studies, or practice languages, to name a few examples.

Risks and Regulation of AI in Education According to the Law

The growing use of artificial intelligence in education offers great opportunities, but it also presents challenges that require clear regulation. Without an appropriate legal framework, the use of AI in assessment, personalized learning, or data management can lead to bias, infringe on student privacy, and compromise equity or transparency in educational and learning processes.

It is also essential to ensure that these technologies support the work of educators rather than replace their pedagogical role. For this reason, specific regulation in the field of education is important to ensure the ethical, responsible, safe, and equitable use of AI in education.

The AI Act establishes that some applications may be considered high-risk, while others will be acceptable under specific conditions:

Teachers and students are already using AI to create content, but it is important to consider the legal and ethical limits of its use

- Prohibited applications (unacceptable risk): Placing on the market, putting into service for this specific purpose, or using AI systems to infer the emotions of a natural person in the workplace or in educational institutions is banned, except when the AI system is intended to be used or marketed for medical or safety purposes.

- High-risk applications (require prior evaluation): In education and vocational training, these are:

  - AI systems intended to be used to determine access or admission, or to assign individuals, to educational and vocational training institutions at all levels.

  - AI systems intended to be used to assess learning outcomes, including when those outcomes are used to guide the learning process in educational and vocational training institutions at all levels.

  - AI systems intended to be used to evaluate the appropriate educational level a person will receive or can access, within the context of educational and vocational training institutions at all levels.

  - AI systems intended to be used to monitor and detect prohibited student behavior during exams in or by educational and vocational training institutions at all levels.

- Minimal-risk applications: The AI regulation does not set specific requirements for systems considered to carry minimal or no risk. Most AI applications currently in use in the EU fall into this category, such as video games with AI functionalities or automatic spam filters.

High-risk systems are subject to strict obligations to ensure their safety and reliability. The requirements they must meet include conformity assessment, risk management, data quality, technical documentation, transparency and user information, human oversight, and cybersecurity.
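An institution that keeps an inventory of its AI tools could record the classification above as a simple lookup. The Python sketch below is illustrative only: the use-case labels, the `EDUCATION_USE_RISK` table, and the functions `classify` and `obligations_for` are hypothetical names chosen for this example, and the mapping only restates the categories described in this article.

```python
from enum import Enum
from typing import List, Optional


class RiskLevel(Enum):
    """The four risk categories described in the AI Act."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical labels for the uses named in this article (assumption:
# a real inventory would need a case-by-case legal review).
EDUCATION_USE_RISK = {
    # Prohibited in educational institutions; the medical or safety
    # exception is not modelled in this sketch.
    "emotion_inference": RiskLevel.UNACCEPTABLE,
    "access_admission_or_assignment": RiskLevel.HIGH,
    "learning_outcome_assessment": RiskLevel.HIGH,
    "educational_level_evaluation": RiskLevel.HIGH,
    "exam_monitoring": RiskLevel.HIGH,
    "spam_filter": RiskLevel.MINIMAL,
}

# Requirements the article lists for high-risk systems.
HIGH_RISK_OBLIGATIONS = [
    "conformity assessment",
    "risk management",
    "data quality",
    "technical documentation",
    "transparency and user information",
    "human oversight",
    "cybersecurity",
]


def classify(use: str) -> Optional[RiskLevel]:
    """Return the risk level for a known use, or None if it is not listed."""
    return EDUCATION_USE_RISK.get(use)


def obligations_for(use: str) -> List[str]:
    """Return the listed obligations for high-risk uses, else an empty list."""
    if classify(use) is RiskLevel.HIGH:
        return list(HIGH_RISK_OBLIGATIONS)
    return []


if __name__ == "__main__":
    for use in ("exam_monitoring", "spam_filter", "chatbot_tutor"):
        print(use, classify(use), obligations_for(use))
```

Returning `None` for an unlisted use is a deliberate choice in this sketch: a tool that does not appear in the table is flagged for review rather than silently treated as minimal risk.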

Figure 2: European AI Regulation.

The AI Act was approved in 2024 and will undergo a phased implementation over the coming years. According to the planned timeline, it will be fully applied to all relevant actors by 2027.

Implications for Teachers and Educational Institutions

The legal framework established by the European Parliament requires governments to implement the law gradually. However, until it is fully applied, it is important to consider certain scenarios:

- The use of a large language model (LLM) is subject to age restrictions, which directly affects students. In some cases, parental or legal guardian consent may be required.

- Regarding applications or platforms based on LLMs, such as ChatGPT, it is recommended not to use them as evaluation tools. There is no prior assessment that certifies, validates, or authorizes this use. This does not mean that LLMs have no educational value; they can be used as support tools.

- When it comes to AI-based tools or resources, it is essential to verify where the data is stored, whether it is shared with third parties, who has access to it, and whether the data or conversations are used to train and improve the model.

Educational institutions must review data security before introducing tools based on generative AI

Although the General Data Protection Regulation (GDPR) already establishes strict rules, the Artificial Intelligence Act (AI Act) has not yet been fully implemented. This legal gap requires vigilance to ensure that AI-based tools or resources comply with the standards set by the GDPR and are aligned with future AI Act regulations.

For users and educational institutions, these aspects often go unnoticed due to their legal complexity, which may pose risks to data protection and privacy. In light of this scenario, it is recommended that, before using a generative AI model (LLM) or contracting an AI-based service, the following actions be taken:

- Review privacy policies to check whether data is used to train the model or shared with third parties.

- Prioritize AI tools that comply with the GDPR and provide guarantees regarding data processing.

- Verify whether the provider is subject to EU regulations and whether it is taking steps to align with the AI Act.

- Avoid services that are not transparent about how data is used and stored, especially in educational settings.

- If the service is intended for minors, ensure it complies with data protection regulations for children and does not require unnecessary personal information.
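The checklist above can be kept as a small structured record so that every tool is reviewed against the same questions. The following Python sketch assumes a hypothetical `ToolReview` record and `open_concerns` function; the field names are invented for illustration and simply mirror the five recommendations, and the answers would still have to come from reading each provider's policies.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ToolReview:
    """Answers gathered from a provider's privacy policy and terms (hypothetical fields)."""
    name: str
    trains_on_user_data: bool
    shares_with_third_parties: bool
    gdpr_compliant: bool
    provider_subject_to_eu_rules: bool
    transparent_about_data_use: bool
    intended_for_minors: bool = False
    collects_only_necessary_data: bool = True


def open_concerns(review: ToolReview) -> List[str]:
    """List the checklist items that this tool does not yet satisfy."""
    concerns = []
    if review.trains_on_user_data:
        concerns.append("data is used to train the model")
    if review.shares_with_third_parties:
        concerns.append("data is shared with third parties")
    if not review.gdpr_compliant:
        concerns.append("no GDPR guarantees on data processing")
    if not review.provider_subject_to_eu_rules:
        concerns.append("provider is not subject to EU regulations")
    if not review.transparent_about_data_use:
        concerns.append("unclear how data is used and stored")
    if review.intended_for_minors and not review.collects_only_necessary_data:
        concerns.append("requests unnecessary personal data from minors")
    return concerns


if __name__ == "__main__":
    # "ExampleTutor" is a made-up tool used only to show the call.
    review = ToolReview(
        name="ExampleTutor",
        trains_on_user_data=True,
        shares_with_third_parties=False,
        gdpr_compliant=True,
        provider_subject_to_eu_rules=True,
        transparent_about_data_use=True,
        intended_for_minors=True,
        collects_only_necessary_data=False,
    )
    for concern in open_concerns(review):
        print(f"{review.name}: {concern}")
```

An empty list means no item on this checklist was flagged; it does not by itself establish compliance with the GDPR or the AI Act.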

Conclusions and Future Outlook

The European AI Act will mark a turning point in the use of AI in education. While some applications will be restricted to protect students’ rights, many others have the potential to positively transform learning. Teachers and institutions will need to adapt to this new legal framework to ensure the ethical and responsible use of AI. Training in AI and effective regulation will be key to harnessing its potential without compromising the fundamental principles of education.

Manel Soengas is a technology teacher and industrial engineer and holds a master’s degree in AI. He currently works as a technology manager in the ICT department of the Ministry of Education, combining this role with training in digital culture, outreach, communication, and advisory work related to artificial intelligence.